Combining Statistics, SiteCatalyst, and Test&Target for Massive Conversion Lift

SiteCatalyst and Test&Target are both amazing, powerful tools: SiteCatalyst helps digital marketers understand the traffic on their site, and Test&Target lets them test marketing ideas on groups of users. However, business users often wonder how to make sense of the titanic amount of data these tools collect and actually use it to increase conversion on their site. Today I’m going to show you how to build a statistical model from SiteCatalyst data that finds the users most likely to complete a conversion, and then target those exact individuals with Test&Target to get some amazing conversion lift!

To explain how this works, I’ll break the process down into four steps:

1. Define your most important conversion event

First, you’ll need to define your most important conversion event. This could be a purchase, a subscription, or a form that you’d like your users to fill out. The event must be measured in SiteCatalyst, using a success event, an eVar, or a prop.

2. Construct a statistical model to find those users who are most likely to convert

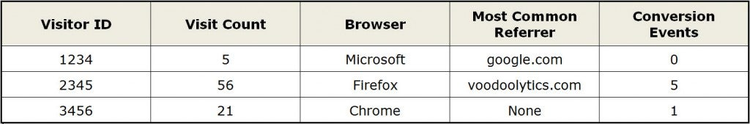

Next, Adobe Consulting can help you create a special dataset for building a machine learning model that forecasts each visitor’s likelihood of conversion. The model combines the conversion event you defined earlier with all the other SiteCatalyst data collected about each visitor to your site. The dataset will look something like this:

![Example dataset](http://blogs.adobe.com/digitalmarketing/wp-content/uploads/2012/09/decision-tree.jpg)
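Here is a minimal sketch of how hit-level data might be rolled up into one row per visitor. It assumes a hypothetical list of hits with made-up field names. It also splits the date range at a cutoff, so the features come from earlier behavior and the label (from the conversion event defined in step 1) comes from later behavior. That split is one assumed way to frame "will convert in the future." It is not necessarily how Adobe Consulting builds its dataset.

```python
from datetime import date

# Hypothetical hit-level records: (visitor_id, hit_date, page_type, events).
# Field names and values are illustrative assumptions, not a SiteCatalyst export format.
HITS = [
    ("v1", date(2012, 8, 1), "product", set()),
    ("v1", date(2012, 8, 3), "search", set()),
    ("v1", date(2012, 9, 2), "checkout", {"purchase"}),
    ("v2", date(2012, 8, 5), "home", set()),
    ("v3", date(2012, 8, 10), "product", set()),
    ("v3", date(2012, 8, 11), "product", set()),
]


def build_dataset(hits, cutoff, conversion_event="purchase"):
    """Summarize hits into one row per visitor seen before `cutoff`.

    Feature columns count behavior before `cutoff`; the "converted" label is 1 if
    the visitor fired `conversion_event` on or after `cutoff`, so the model learns
    to predict future conversion.
    """
    rows = {}
    # Pass 1: summarize each visitor's behavior in the observation window.
    for visitor_id, hit_date, page_type, _events in hits:
        if hit_date >= cutoff:
            continue
        row = rows.setdefault(
            visitor_id, {"hits": 0, "product_views": 0, "searches": 0, "converted": 0}
        )
        row["hits"] += 1
        if page_type == "product":
            row["product_views"] += 1
        elif page_type == "search":
            row["searches"] += 1
    # Pass 2: label visitors who fired the conversion event in the outcome window.
    for visitor_id, hit_date, _page_type, events in hits:
        if hit_date >= cutoff and visitor_id in rows and conversion_event in events:
            rows[visitor_id]["converted"] = 1
    return rows


for visitor_id, row in build_dataset(HITS, cutoff=date(2012, 9, 1)).items():
    print(visitor_id, row)
```

Splitting at a cutoff keeps the conversion hit itself out of the features. Without the split, the model could "predict" conversions simply by seeing them.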

Each row in the dataset summarizes an individual user’s behavior over a given date range, and its columns can include any data you’re recording that might serve as inputs to the model. The model (in our case, a decision tree) uses all of these user attributes as inputs and spits out the probability that a given user will convert in the future. If you’ve never heard of a decision tree before, it’s worth reading a short introduction to them first. The output of a decision tree looks something like this:

![Example decision tree output](https://blog.adobe.com/media_5caec665b52bbf7d67a395178abcdd124983c168.gif)

This decision tree is fabricated, but it’s easy to see how it sorts users into groups that are more or less likely to complete a conversion event based on their behavior. A decision tree is a great model for this purpose because it produces a set of output rules that can be used as segment definitions in SiteCatalyst or as targeting rules in Test&Target. For the example above, we could set up a “Likely Converters” segment with the following definition:

![Likely Converters segment definition](https://blog.adobe.com/media_e9edbc0b2c681d357bf9d377d8a61367623627d8.gif)

This SiteCatalyst segment would allow you to report on users who are most likely to complete your conversion event. Likewise, you could set up a SiteCatalyst segment to report on users who are unlikely to convert.
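To make the idea of output rules concrete, here is a minimal sketch of a decision stump (a decision tree with a single split) in plain Python. The post doesn’t say which algorithm or tool Adobe Consulting uses. The Gini criterion, feature names, data, and 0.5 probability cutoff here are all assumptions for illustration.

```python
# Hypothetical per-visitor rows: features plus a 0/1 "converted" label (made-up values).
ROWS = [
    {"product_views": 0, "searches": 1, "converted": 0},
    {"product_views": 1, "searches": 0, "converted": 0},
    {"product_views": 1, "searches": 2, "converted": 0},
    {"product_views": 2, "searches": 0, "converted": 0},
    {"product_views": 4, "searches": 1, "converted": 1},
    {"product_views": 5, "searches": 0, "converted": 1},
    {"product_views": 6, "searches": 2, "converted": 0},
    {"product_views": 7, "searches": 1, "converted": 1},
]


def gini(labels):
    """Gini impurity of a list of 0/1 labels (0 means the group is pure)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def fit_stump(rows, features):
    """Fit a depth-1 decision tree: the one split that minimizes weighted Gini impurity.

    Each leaf's probability is the observed conversion rate of the rows in it.
    """
    best = None
    for feature in features:
        for threshold in sorted({row[feature] for row in rows}):
            left = [row["converted"] for row in rows if row[feature] <= threshold]
            right = [row["converted"] for row in rows if row[feature] > threshold]
            if not left or not right:
                continue  # a split must send rows to both sides
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best["score"]:
                best = {
                    "score": score,
                    "feature": feature,
                    "threshold": threshold,
                    "p_left": sum(left) / len(left),
                    "p_right": sum(right) / len(right),
                }
    if best is None:
        raise ValueError("no feature has a usable split")
    return best


def is_likely_converter(stump, row, min_probability=0.5):
    """Segment rule: the visitor's leaf has a conversion rate at or above the cutoff."""
    goes_left = row[stump["feature"]] <= stump["threshold"]
    probability = stump["p_left"] if goes_left else stump["p_right"]
    return probability >= min_probability


stump = fit_stump(ROWS, ["product_views", "searches"])
print(f"split on {stump['feature']} <= {stump['threshold']}: "
      f"p_left={stump['p_left']:.2f}, p_right={stump['p_right']:.2f}")
```

With this made-up data, the best split is `product_views <= 2`. It leaves a right-hand leaf where 75% of visitors converted. The resulting rule, "more than 2 product views," is the kind of condition that could be entered as a segment definition. Real decision tree tools grow deeper trees by repeating this split search within each leaf.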

3. Have Adobe Consulting import these likely converters into a Test&Target profile

Valuable visitor segments are often defined by things visitors did not do over a long period of time. Such segments are very difficult to target with Test&Target alone, because a visitor’s profile expires two weeks after the last mbox they visited. To work around this problem, Adobe Consulting has created a process to import these users’ IDs into a Test&Target profile. This opens new and exciting targeting possibilities at the individual visitor level that just weren’t possible before.
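The post doesn’t describe the format Adobe Consulting’s import process uses. This minimal sketch therefore only shows the general shape of the hand-off: it turns model scores into a per-visitor list. It assumes a simple CSV with hypothetical column names (`visitor_id`, `conversion_segment`) and hypothetical labels. It also assumes the visitor IDs are ones both SiteCatalyst and Test&Target can match.

```python
import csv
import io


def segment_import_csv(scores, min_probability=0.5):
    """Return CSV text with one row per visitor and a hypothetical segment label.

    `scores` maps a visitor ID to the model's predicted conversion probability.
    The column names and label values are placeholders, not a documented
    Test&Target import format.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["visitor_id", "conversion_segment"])
    for visitor_id in sorted(scores):
        label = "likely" if scores[visitor_id] >= min_probability else "unlikely"
        writer.writerow([visitor_id, label])
    return buffer.getvalue()


# Hypothetical scores, e.g. leaf probabilities from a decision tree.
print(segment_import_csv({"v2": 0.0, "v1": 0.75, "v3": 0.75}))
```

The sketch labels every visitor rather than only the likely converters, so the same file could also support targeting the users who are unlikely to convert.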

4. Create some targeted marketing campaigns and test them on likely converters to achieve maximum lift

Now that you know which users are most likely to complete your conversion event (and even which ones won’t), you can create a marketing campaign aimed specifically at those individuals to drive even greater conversion success.

An important point to note is that no model can guarantee that a user will convert or respond better to a campaign, even when the model suggests they will. For this reason, it’s important to test the campaign on this segment to see whether your predictive model actually paid off. For example, show your campaign to 50% of likely converters and compare their conversion rate against the other 50% of likely converters, who do not see the campaign.
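The 50/50 comparison can be sketched as follows. Test&Target handles traffic allocation and reporting itself. The hash-based split and pooled two-proportion z-test below are illustrative choices for reasoning about such a test. They are not a description of how Test&Target assigns visitors or computes confidence, and the salt string is a hypothetical test name.

```python
import hashlib
import math


def assign_group(visitor_id, salt="likely-converter-test"):
    """Deterministically split visitors roughly 50/50 into 'campaign' and 'control'.

    Hashing the ID (plus a hypothetical per-test salt) keeps each visitor in the
    same group on every visit.
    """
    digest = hashlib.sha256(f"{salt}:{visitor_id}".encode("utf-8")).hexdigest()
    return "campaign" if int(digest, 16) % 2 == 0 else "control"


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test comparing group A's conversion rate with group B's.

    Returns (relative_lift, z, two_sided_p_value), with lift measured relative to B.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0, 1) or p_b == 0:
        raise ValueError("need conversions and non-conversions, and a nonzero control rate")
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return (p_a - p_b) / p_b, z, p_value


# Hypothetical counts for illustration only: campaign vs. control among likely converters.
lift, z, p = two_proportion_z_test(120, 1000, 100, 1000)
print(f"lift={lift:.1%} z={z:.2f} p={p:.3f}")
```

With those hypothetical counts, the lift is 20%, but the p-value is about 0.15. A difference that size would not be conclusive at this sample size, which is exactly why the holdout group matters.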

To summarize, here’s what the process flow looks like:

![Process flow summary](https://blog.adobe.com/media_18917cca8a858ac51fc9abb891ba80ddf33d3299.gif)

Several Adobe Consulting customers have begun using this process (or a similar one) and have already seen substantial success. If you’re interested in using statistical models to guide your testing efforts, contact your sales rep or account manager soon!