A World of Creative Possibilities with Augmented Reality UX

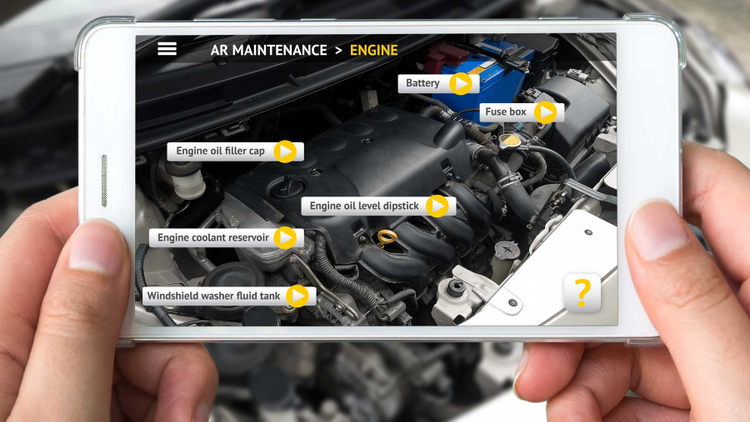

I recently went on a trip to the Isle of Wight, a beautiful island off the south coast of England. Unfortunately, while I was waiting to board the ferry, my car broke down and I had to call roadside assistance to find out what was wrong with it. The diagnostics took well over an hour, as the mechanic they sent didn’t recognize some parts of the dashboard and couldn’t establish which sensor was affected. He had to pull each one out, check a code on it, call a more knowledgeable colleague, and then try another.

I remember thinking how easy it would’ve been if he could simply recognize the sensor type just by looking at it and its position relative to others.

It made me think of HoloLens, Microsoft’s holographic computer. If he had used it or a similar device, he could’ve seen all the information about the sensors displayed right next to them. This alone, with no interaction whatsoever, would’ve saved him a lot of time.

This kind of augmented reality interface, where tasks can be accomplished using contextual information collected by a system, would be amazing from a UX perspective.

What augmented reality does is connect digital and physical experiences to offer users real-time feedback on what they are doing. With its help, instead of just seeing information on a screen and having to provide explicit commands to act on the output, users can interact with the real world and with programmed interactive elements to manipulate the outcomes.

There are many more practical examples of how AR could improve the user experience. Personally, I think the retail sector is where augmented reality will gain the most traction at first. AR will transform how people shop by bringing the online and offline shopping experiences together. Imagine going to a store where, every time you look at a product, tags appear next to it showing the price, description, measurements and reviews. Or, for clothing, we could see a life-size model, adjustable to various body sizes, that we can try clothes and shoes on.

But what gets me most excited about AR is not necessarily the various possible areas of implementation. The real opportunity for UX designers comes in the form of creative freedom. It’s the freedom to define UX patterns and guidelines as there is little to no design precedent for this technology. We can define best practices that evolve as augmented reality does.

Think about it. At its core, UX design is about creating solutions that help users do specific tasks or reach their goals in a non-distracting way. Jared Spool famously wrote, “Good design, when it’s done well, becomes invisible. It’s only when it’s done poorly that we notice it.”

Nowadays, we have a lot of UX patterns and best practices that influence every component library available. In the web and mobile world, components behave similarly from one app to another. They follow similar guidelines and visual languages thanks to years of testing and refining. As a result, as more and more people use these apps and websites every day, the design becomes almost invisible.

We need to create and apply similar principles to AR content as well, so that its elements feel almost like part of the natural environment: visible, but not distracting.

In AR, we have no patterns yet. Everything released so far is just a prototype waiting to be tested. The new ideas that big companies are playing with are experiments to see what will gain traction. For example, Amazon is exploring opening AR furniture and electronics stores, and Alibaba is investing in creating AR dashboards for cars. There are now hundreds of initiatives from both large and medium-sized companies, and all of them start from the assumption that users need a specific service that AR can improve. These companies invest heavily in testing such assumptions, as they need to see AR’s full potential in relation to the current tech environment.

These concepts mostly use UI elements influenced by the web and mobile world. This causes a perception problem, because it’s obvious these elements are not part of the real world. We, as designers, need to address the mismatch between them and the reality we see. As we’re no longer limited to screens, we can design virtual images that take into account variables such as depth, shadow and lighting.

So what can we use as an extra source of inspiration for creating these AR experiences? Video game design plays a huge part in showing how elements can be placed on top of the real world. Video games have taught us where to expect items to be placed on the screen relative to the scene behind them. This is a start, but it’s not the norm. At least not yet.

I see this in almost all the concepts that are appearing these days, including the above-mentioned Alibaba investment. WayRay’s Navion displays virtual indicators right on the road ahead, without requiring any headgear or eyewear.

http://blogs.adobe.com/creativecloud/files/2017/06/image3-1.jpg

http://blogs.adobe.com/creativecloud/files/2017/06/image1.png

In the video game “Need for Speed”, the controls are placed in the corners of the screen so that they don’t obstruct the view. But how can we know which dashboard elements are most relevant for the driver to see at all times? By showing the RPM gauge we occupy more windshield real estate, but if we only show the speed, we ignore the use case where drivers want to improve the car’s performance and fuel efficiency.

So what would the process look like? The UX process is the same iterative one we have followed up until now: define, ideate, prototype, test and refine. But this time, both the ideation and prototyping steps need to be reinvented, as there are no patterns to follow. And once the concepts are created, it’s a matter of testing and refining until we get it right.

The future of design looks incredibly promising, as augmented reality is here to stay. As we learn by testing patterns, every new usability finding will contribute significantly to this technology’s development.