Seeing is believing: It's time to restore trust in online media

Recently, an image began circulating on the web depicting Pope Francis walking around in a chic white puffer jacket. Within hours it went viral, leaving people at once confused by and applauding the 86-year-old leader’s sense of style. It turned out the image was fake, generated entirely with AI.

While this example was relatively harmless, the next ones won’t be. The ability to create convincing synthetic images of political leaders, celebrities, even people you know, in just seconds is at our fingertips. AI has now come to audio-generation tools as well, letting people create realistic-sounding clips of anyone saying anything, just by uploading a few minutes of their voice. Across all types of content, the point at which we can no longer tell fact from fiction is right around the corner.

The danger of synthetic media is that seeing is believing. While we may have been trained to be more skeptical of the written word when we don’t know or trust the source material, we tend to trust what we see and hear as being “real”. Fifty percent of the brain is dedicated to visual processing, causing us to naturally focus our attention on content like images. One MIT study showed that we can process an image in just 13 milliseconds. That’s not much time for reflection.

But we can take advantage of our nature: “seeing is believing” is exactly what we need in a solution to fight the dangers of misinformation at scale. The right solution will enable content creators who want to be trusted to show their work so that we can believe what’s real.

Show your work, restore trust

This approach is the basis of the Content Authenticity Initiative (CAI), whose global coalition has grown to more than 1,000 members from all areas of technology, media, and policy since its founding in 2019. This overwhelming support in just four years demonstrates the urgent need for a global solution to misinformation.

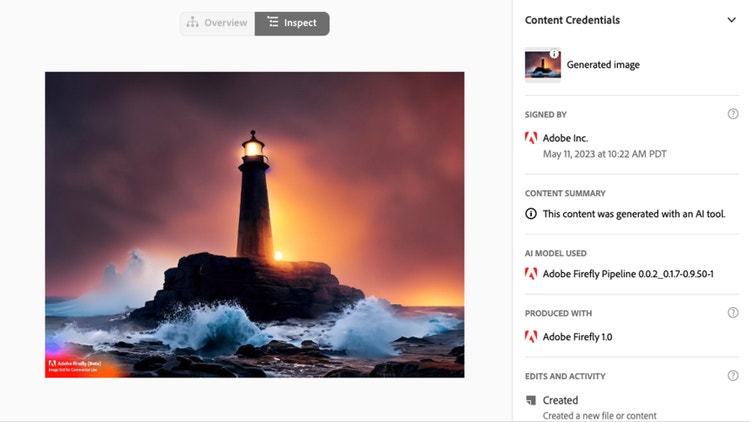

The CAI brings transparency to digital content through Content Credentials, metadata that accompanies each image. Content Credentials are essentially a nutrition label for images: they show you what you are consuming when you consume it. They can show information such as name, date, and what tools were used to create an image, as well as any edits that were made to the image along the way. This way, when you see the content, you can see for yourself how it came to be. This solution removes any gatekeeper judgement from the process of believing. Instead, by providing information directly to the public, it allows people to form their own independent opinions about who and what to trust.
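To make the nutrition-label idea concrete, here is a minimal Python sketch of the kind of record described above: a name, a date, the tools used, and the edits made along the way. It is an illustration only, not the C2PA manifest format or any CAI library API. The class names, field names, and sample values (`ContentCredential`, `EditAction`, `render_label`, "Jane Doe") are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class EditAction:
    """One edit made along the way (hypothetical structure)."""
    tool: str
    description: str


@dataclass
class ContentCredential:
    """Illustrative provenance record; NOT the real C2PA manifest format."""
    creator: str
    created: date
    tools: list[str]
    edits: list[EditAction] = field(default_factory=list)


def render_label(cred: ContentCredential) -> str:
    """Format the record as a short, human-readable label."""
    lines = [
        f"Created by: {cred.creator}",
        f"Date: {cred.created.isoformat()}",
        f"Tools: {', '.join(cred.tools)}",
    ]
    if cred.edits:
        lines.append("Edits:")
        lines.extend(f"  - {e.description} ({e.tool})" for e in cred.edits)
    else:
        lines.append("Edits: none recorded")
    return "\n".join(lines)


# Made-up sample values, for illustration only.
example = ContentCredential(
    creator="Jane Doe",
    created=date(2023, 4, 1),
    tools=["Example Camera", "Example Editor"],
    edits=[EditAction("Example Editor", "Cropped image")],
)
print(render_label(example))
```

The point of the sketch is that the label only presents recorded facts. It makes no judgement about whether the image should be trusted and leaves that decision to the viewer.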

Content Credentials can also indicate when an image was generated with AI. In Adobe’s generative AI model Firefly, this information is automatically displayed. Just recently, other generative AI developers, including Stability AI and Spawning AI, have agreed to this approach and joined the CAI, helping bring more transparency to all types of digital content, whether created by a human or by AI.

Content Credentials for an image created in Firefly indicate that the image was generated with an AI tool.

Most importantly, Content Credentials are a free, open-source technology that anyone can incorporate into their own products and platforms, based on an open standard created by the Coalition for Content Provenance and Authenticity (C2PA). Recent implementations of the C2PA standard demonstrate the potential this technology holds.

For example, photojournalist Ron Haviv worked with Starling Labs to add Content Credentials to his images. Now the images, featured in Rolling Stone, come with verified context attached to them so viewers can easily tell the images are from the 1992 Bosnian War, and not from the ongoing war in Ukraine, as they were incorrectly purported to be. Leading camera manufacturers Leica and Nikon are also building the C2PA standard directly into cameras to document essential information, like name, date, camera model and manufacturer, even image content, to drive transparency and authenticity from the moment an image is captured.

But in order for this solution to work, everyone needs to adopt it. We need Content Credentials where we capture, create and distribute content. So while the CAI has reached 1,000 members, there is more work ahead.

Adopting Content Credentials to protect election integrity

Misinformation is often used to portray events or people a certain way for political gain, and even without bad intent, fake images can cause people to form political opinions based on inaccuracies or untruths. As the 2024 presidential elections draw nearer, we can only expect this problem to grow. And we risk people losing faith in the facts and quite possibly in the election process altogether. The first time people see misinformation, they will be deceived. The second time, they won’t believe anything they see or hear, even if it’s true. At that point, our whole democracy is in danger if no one has a trusted way of obtaining facts.

A collective commitment to responsible innovation

Misinformation is not new, but the potential for misuse of AI has made the problem more dire and the need for a solution more urgent. The good news is we already have a working solution that, with private and public collaboration, can make a real difference in the fight against misinformation. Immediately.

Now the onus is on us: Everyone from tech companies to camera companies, news outlets, and social media platforms should implement this technology. Governments should work to drive transparency standards across industries and educate the public on both the dangers of misinformation and the tools at our disposal to fight it. And all of us should demand this level of transparency in our digital content. Having a solution means we are part of the problem if we don’t act.

When you hear people call for responsible innovation, this is it. This is how we realize the incredible power of AI while taking the necessary steps to ensure it doesn’t spiral out of control in the hands of bad actors. Democracy is on the line and we all have a role to play to protect it.