Amazon Robotics combines the power of NVIDIA Omniverse and Adobe Substance 3D to simulate warehouse operations

Image courtesy of Amazon Robotics.

The increasing importance of AI and synthetic data to run simulation models comes with new challenges. One of these challenges is the creation of massive amounts of 3D assets to train AI perception programs in large-scale real-time simulations.

This applies to a wide variety of industries, such as autonomous driving, blueprint editing in architecture, defect detection in manufacturing, and much more. In this article, we will learn how Amazon Robotics tackles this challenge for warehouse operations by leveraging the procedural power of Substance 3D combined with NVIDIA Omniverse.

In late 2021, the Amazon Robotics Virtual Systems team adopted Adobe Substance 3D, Universal Scene Description (USD), and NVIDIA Omniverse, a software platform for connecting 3D pipelines and developing advanced, real-time 3D applications, to enhance the development of 3D environments. Composed of software development engineers and technical artists, the team collaborates to create diverse models representing numerous solutions within Amazon.

The challenge of visualizing and running these simulations lies in the creation of thousands of digital twins, i.e., virtual packages. To automate this process, the team adopted Substance 3D and Houdini to create thousands of random, procedurally generated assets.

Joining us today are Haining Cao and Hunter Liu from the Virtual Systems team, who will discuss the integration of Substance 3D and Omniverse.

The Virtual Systems team

Haining: Hi, my name is Haining Cao. I have been with Amazon Robotics for one and a half years as a texturing artist.

Hunter: Hi, my name is Hunter Liu and I work for Amazon Robotics as a technical artist mainly focused on our art pipeline and procedural content creation.

The Virtual Systems Team collaborates on a wide range of projects, encompassing both extensive solution-level simulations and individual workstation emulators as part of larger solutions. To describe the 3D worlds required for these simulations, the team utilizes USD. One of the team’s primary focuses lies in generating synthetic data for training machine learning models used in intelligent robotic perception programs.

Haining: A recent project involved creating procedurally randomizable boxes from Amazon and other vendors. Our team can run simulations in 3D environments with large quantities of completely procedural boxes, which saves time and money. We use Houdini, a powerful tool for mesh modification, for procedural mesh generation and Substance 3D Designer for procedural texture generation, then load the results into Omniverse. Here is an example of a sample we generated.

Another project we are working on is recreating one of the Amazon warehouses in 3D for various simulations and testing. This is a huge project because there is a large quantity of different objects in the warehouse, and we must incorporate variations in conditions on equipment and infrastructure to mimic real-life situations.

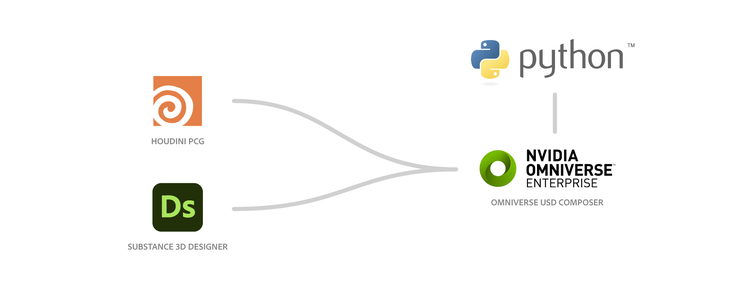

Pipeline overview

Haining: In the past year, we have developed multiple workflows by leveraging various DCC tools and NVIDIA Omniverse. As mentioned previously, we have recently designed a new pipeline dedicated to creating a representative dataset of ASIN (Amazon Standard Identification Number) packaging. Considering the vast range of products sold by Amazon, it would have been extremely challenging to include every single item in the dataset. Therefore, our goal was to develop a workflow that would allow us to automate the generation of diverse types of boxes and apply different materials to them.

Through this process, we have successfully produced boxes with countless variations in terms of shape, size, color, and texture, resulting in thousands of unique combinations. This dataset will play a crucial role in training data perception models with randomized inputs.

Haining: For the box generation, I work on the procedural material using Substance 3D Designer. I think Substance 3D Designer is a powerful tool and the learning curve is not extremely steep. The node graph makes it clear and easy to use, and Designer allows me to build custom materials that can batch-generate thousands of various textures with one Substance graph.

Usually, I start by meeting the internal customers to understand their specific needs for the project. For example, I ask what types of variations they need on the materials (color, roughness, specific types of damage, etc.). Then I’ll ask them to provide any reference photos they have.

From there, I’ll start with the base cardboard or the paper material, then layer in the decors (stickers and logos), and at the end add in the damage. Some of the patterns are built using the tile generator, a tool for random patterns in Designer.

After the material is done, depending on the project, I’ll export it as an SBSAR into Omniverse or hand it off to Hunter, who can randomize the textures in Houdini using the Substance material.

Hunter: In our most complex synthetic data projects, we employ scripting techniques to streamline the processing of Illustrator files in batches. By extracting pertinent information from these inputs, we can effectively translate the designs into 3D structures. To achieve this, we leverage Houdini. Then we integrate a Substance 3D file by incorporating it through a Substance node within the Houdini pipeline. This step ensures the completion of our data generation process.

To introduce further variations, we utilize PDG (Procedural Dependency Graph) within Houdini. PDG enables us to efficiently batch process multiple variations, transforming the Illustrator files into distinct meshes and textures.
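The fan-out described here can be pictured as a cross product of input designs and variation seeds. The sketch below is plain Python, not Houdini's PDG API; the file names, the `WorkItem` type, and the number of variations are hypothetical stand-ins chosen only for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class WorkItem:
    """One unit of batch work: a source design plus a variation seed."""
    design_file: str  # hypothetical Illustrator file name
    seed: int         # drives the random choices for this variation


def expand_work_items(design_files, variations_per_design):
    """Pair every design with every seed, similar in spirit to a batch fan-out."""
    seeds = range(variations_per_design)
    return [WorkItem(f, s) for f, s in product(design_files, seeds)]


# Hypothetical inputs: three designs, four variations each.
designs = ["mailer_box.ai", "shipping_carton.ai", "padded_envelope.ai"]
items = expand_work_items(designs, variations_per_design=4)
print(len(items))  # 3 designs x 4 seeds = 12 work items
```

Because each work item carries its own seed, any single variation could be regenerated later without rerunning the whole batch.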

Once the synthetic data generation pipeline is complete, we publish the results to NVIDIA Omniverse. Now we have both the procedural asset and material, and we can change parameters directly inside Omniverse. We use Python to trigger randomized values for both mesh and material.
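As a rough illustration of triggering randomized values from Python, the sketch below only samples the values; applying them to an asset inside Omniverse is deliberately left out. Every parameter name and range here (`width_cm`, `roughness`, `damage_amount`, and so on) is an assumption, not the team's actual setup.

```python
import random
from dataclasses import dataclass


@dataclass
class BoxVariant:
    # Mesh parameters (hypothetical names and units)
    width_cm: float
    height_cm: float
    depth_cm: float
    # Material parameters (hypothetical names, normalized to 0..1)
    roughness: float
    damage_amount: float


def sample_box_variant(rng: random.Random) -> BoxVariant:
    """Draw one random set of mesh and material values."""
    return BoxVariant(
        width_cm=rng.uniform(10.0, 60.0),
        height_cm=rng.uniform(5.0, 40.0),
        depth_cm=rng.uniform(10.0, 60.0),
        roughness=rng.uniform(0.4, 0.9),
        damage_amount=rng.uniform(0.0, 1.0),
    )


# A fixed seed makes the batch reproducible from run to run.
rng = random.Random(42)
variants = [sample_box_variant(rng) for _ in range(1000)]
```

Keeping mesh and material values in one record means a single seed describes the whole box, which can help when tracing a training image back to the settings that produced it.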

Through this integration, we can share and collaborate on the synthetic data generated within the Omniverse ecosystem.

The Substance 3D toolset

Hunter: Substance 3D offers several strengths that make it a valuable tool in texture creation.

- First, it provides high-level control, allowing us to precisely design and manipulate specific features of the texture. This level of control ensures that we can achieve the desired aesthetic and detail in our textures.

- Second, Substance 3D employs a non-destructive workflow, enabling us to iterate and make changes to textures without losing any of the previous work. This flexibility allows for efficient experimentation and refinement during the creative process.

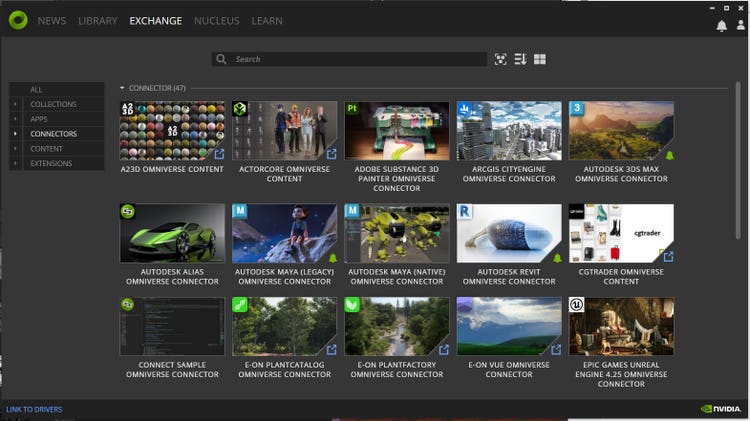

- Substance 3D also integrates seamlessly with the Substance Engine, offering pre-built bridges for software tools like Houdini and the NVIDIA Omniverse platform. This integration facilitates easy transfer and modification of texture files, streamlining the workflow and enhancing efficiency.

- Furthermore, Substance 3D Designer includes a texture baking feature, which enables us to generate various types of texture maps, such as normal maps and ambient occlusion maps, from high-resolution meshes. This capability is particularly beneficial for creating simulation-ready content and optimizing performance.

These features empower us to create intricate and detailed textures while maintaining flexibility, efficiency, and compatibility with other software tools.

Substance integration in NVIDIA Omniverse

Hunter: In our workflow, we primarily rely on pre-baked assets to maintain efficiency and consistency. However, we also take advantage of the Substance native integration within Omniverse to introduce dynamic variations into specific assets.

For example, let’s consider the case of cardboard, which can display various types of wear and tear. By utilizing the integration, we can trigger variations in the visual appearance of the cardboard during the rendering process in Omniverse. As a result, each rendered image will showcase a unique cardboard element with distinct types of damage.

This approach allows us to generate a wide range of realistic visual representations, enriching the authenticity and depth of our synthetic data. By incorporating dynamic variations through the Substance integration, we ensure that the rendered images exhibit a level of variability that faithfully represents real-world scenarios.
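One way to picture per-image variation is to derive the damage settings from the frame index, so that each rendered frame differs yet stays reproducible. The sketch below is plain Python and does not call the Substance or Omniverse APIs; the damage types, weights, and the `damage_for_frame` helper are hypothetical.

```python
import random

# Hypothetical damage types and relative weights; a real Substance graph
# would expose its own parameters.
DAMAGE_TYPES = ["none", "scuffs", "creases", "tears", "water_stains"]
DAMAGE_WEIGHTS = [3, 3, 2, 1, 1]


def damage_for_frame(frame: int, base_seed: int = 0) -> dict:
    """Return material settings for one frame, reproducible per frame."""
    # Seeding with a string built from the frame index means frame N
    # always yields the same settings, regardless of render order.
    rng = random.Random(f"{base_seed}:{frame}")
    return {
        "damage_type": rng.choices(DAMAGE_TYPES, weights=DAMAGE_WEIGHTS, k=1)[0],
        "damage_intensity": round(rng.random(), 3),
    }


settings = [damage_for_frame(f) for f in range(100)]
```

Changing `base_seed` would produce a different but equally repeatable sequence, which is one simple way to generate several independent datasets from the same scene.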

The power of NVIDIA Omniverse

Hunter: Omniverse serves as an invaluable platform for managing and working with our digital twins. The ability to directly work with USD and seamlessly modify models using our preferred DCC tools significantly simplifies our workflow, especially when utilizing Omniverse Nucleus as our asset repository. Furthermore, Omniverse empowers us to build custom tools and workflows using Python and C++, allowing us to develop applications to address specific customer needs.

However, one of the most transformative aspects lies in the simulation-specific extensions bundled with NVIDIA Isaac Sim, a robotics simulation toolkit built on Omniverse. These extensions enable us to generate synthetic data for training perception models and perform live simulations using robotic manipulators, lidar, and other sensors. The integration with Substance materials even allows us to modify material parameters during rendering, further enhancing our capabilities.

Additionally, Omniverse Nucleus plays a vital role in facilitating team collaboration. It allows our team to swiftly adapt to new designs and easily share them with different members for review. The version control feature also enhances our control over 3D assets, enabling us to conveniently manage revisions. If issues arise, we can effortlessly revert to previous versions, ensuring smooth asset management and minimizing risks.

The NVIDIA and Adobe ecosystems

Haining: NVIDIA and Adobe are major innovators in the industry, and their products continue to evolve to help artists like us do our jobs faster and more easily. This motivates us to learn their new tools, keeping us at the forefront of the 3D industry.

Extended credit goes to Brian Basile, Jie Chen, Katherine Hecht, Jenny Zhang, Lucas Tomaselli, and Divyansh Mishra from the Amazon Robotics Virtual Systems Team.

When Amazon Robotics was faced with the double challenge of generating synthetic data models for thousands of assets and running simulations for Amazon’s warehouse operations, the use of Substance, Houdini, and Omniverse together was a game-changer. Using Substance 3D and Houdini to randomly generate thousands of procedural assets allowed a small team of 3D designers to create a streamlined and automated process. Thanks to the integration and compatibility of these tools with NVIDIA Omniverse USD Composer, the team was able to successfully run high-fidelity simulations to train their machine learning and data models in much less time.

A huge thanks to Haining Cao, Hunter Liu, Jie Chen, Brian Basile, and the entire Amazon Robotics team for teaching us about their asset pipeline, and to the NVIDIA team for their collaboration.

Learn more about Adobe Substance 3D.